How Google Analytics Kills Great Blogs

If you remember the Animalz blog for just one thing, let it be this: The oversimplification of content marketing is a drag on the entire industry. A nuanced understanding of content marketing strategy, creation, and measurement is the thing that separates great blogs from the rest.

We’ve previously discussed oversimplification in the context of growth constraints. Content is most often used to increase top-of-funnel traffic—even if that isn’t the constraint on growth. We’ve also covered it in the context of timing, i.e., how do you write for topics that are trending up vs. down?

In these cases (and dozens of others), success is determined long before a single word is written, optimized, or shared. Content “works” only if the strategy is created to solve a real problem—one whose solution is easily tracked and measured. An oversimplified approach results in noise (and wasted budget) every single time.

In my nearly 10 years in content marketing, I’ve spent countless hours in Google Analytics trying to fine-tune a report that uses multichannel attribution to “prove” once and for all that content marketing is worth the spend. I’ve never managed to do it.

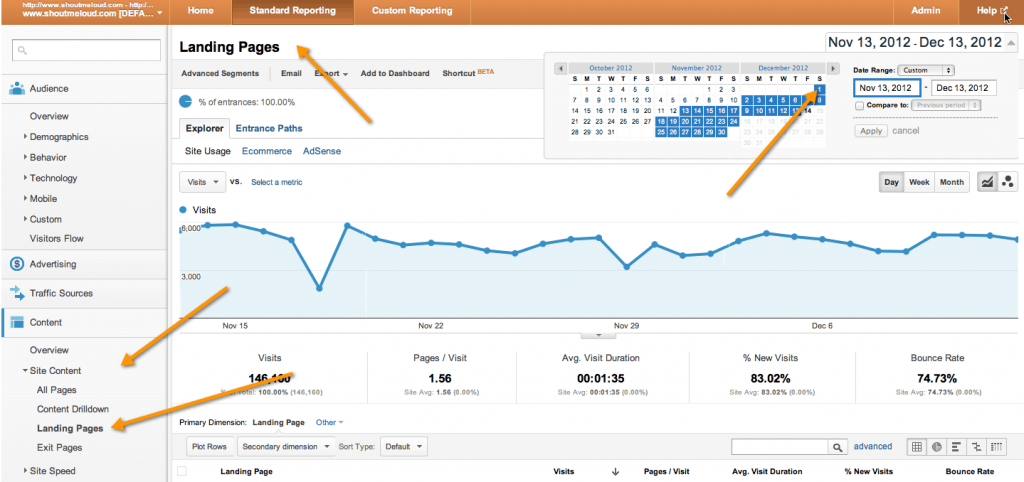

Google Analytics encourages an oversimplified approach to measuring content for three reasons:

- Content marketing ROI is incredibly difficult to measure. The numbers in Google Analytics measure small, specific things, but people tend to believe they are more comprehensive than they actually are.

- Google Analytics is free, so it’s often used out of the box, without any customization. This emphasizes the metrics that Google chooses to show, not the ones that are actually indicative of your success.

- Metrics are updated in real time, which means people check them often. This shortens the timeline that content marketers measure against.

This isn’t really about the problems with Google Analytics; it’s about the desire for near-term ROI, which causes marketers to fiddle with strategies that would work if given enough time. We need a better way to measure content marketing.

To really drive this point home, let’s rewind the clock about 70 years. Content marketers, meet George Katona.

What Content Marketers Can Learn From the Consumer Sentiment Index

In 1946, the Federal Reserve approached Dr. George Katona, an economics professor at the University of Michigan, and asked him to help create a survey to measure Americans’ assets.

He took the job but proposed a twist: A trained psychologist, Katona believed that emotion played a key role in the economy, and he was keen to collect data to prove his theory. If he could find a way to measure the way people felt about money, he might be able to predict the way they spent it.

The Fed rejected his idea. After all, how do you measure feelings on a mass scale? But Katona found a way to get the data he needed from the survey anyway. Instead of asking people how they felt about their personal finances or the economy, he probed with broad, general questions like, “Are you better or worse off financially than you were a year ago?” and “Do you think you’ll be better or worse off in a year?” Optimism (or pessimism) turned out to be a really good predictor of where the economy was heading.

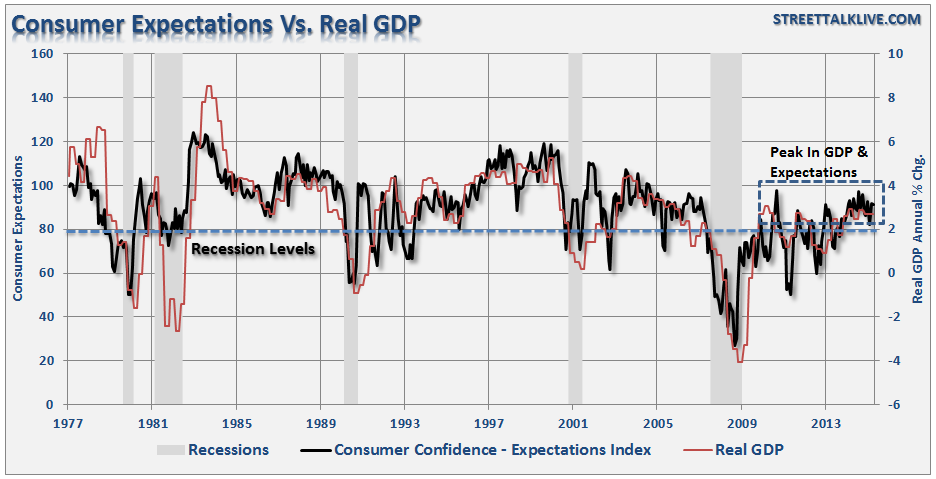

Katona collected this data for years before anyone cared about his “subjective” measurement. But when the idea caught on in the 1970s, he already had decades of data to map against the stock market. So was born the Consumer Sentiment Index.

Amazingly, consumer sentiment dropped—i.e., pessimism increased—just before each recession. The opposite was true, too. As optimism increased, GDP rose. The model worked.

Katona, of course, wasn’t surprised to find that consumers made decisions based on noneconomic motives. The markets rose and fell based on what economist John Maynard Keynes called “animal spirits.” In other words, pure emotion.

Not only did Katona prove that emotion was a driver of economic trends, but he also figured out how to measure it. Decades ago, Katona solved a problem that we in the SaaS marketing world are still wrestling with today. A blog doesn’t grow (or decline) because of raw emotion, but success (or failure) cannot be measured without a holistic set of data points. Google Analytics does not, for example, tell you how people feel about your content and your brand—and that is far more important than page views and bounce rates.

A Better Way to Measure Content Marketing

A few years ago, Moz founder Rand Fishkin published a slide deck that explained Why Content Marketing Fails. He apparently struck a chord: it’s been viewed more than 4.4 million times. The first reason he presents is that stakeholders want to draw a direct line between pageviews and sales. And as any content manager knows, it’s never that simple.

This is exactly the same line of thinking that Katona fought against. He wrote that “traditional economic analysis, not making use of [psychological] survey data, had at its disposal only aggregate data on consumer expenditures . . . and no quantitative data at all on economic motives or expectations.”

Economists before him measured the data they had, and it was incomplete. Marketers today follow the same path. They analyze the data they have, most often from Google Analytics, without considering the metrics they haven’t yet measured.